Vertical Integration In A Sentence

The spectral density of a fluorescent light as a function of optical wavelength shows peaks at atomic transitions, indicated by the numbered arrows.

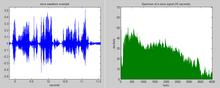

The voice waveform over time (left) has a broad audio power spectrum (right).

The power spectrum of a time series describes the distribution of power into frequency components composing that signal.[1] According to Fourier analysis, any physical signal can be decomposed into a number of discrete frequencies, or a spectrum of frequencies over a continuous range. The statistical average of a certain signal or sort of signal (including noise), as analyzed in terms of its frequency content, is called its spectrum.

When the energy of the signal is concentrated around a finite time interval, especially if its total energy is finite, one may compute the energy spectral density. More commonly used is the power spectral density (or simply power spectrum), which applies to signals existing over all time, or over a time period large enough (especially in relation to the duration of a measurement) that it could as well have been over an infinite time interval. The power spectral density (PSD) then refers to the spectral energy distribution that would be found per unit time, since the total energy of such a signal over all time would generally be infinite. Summation or integration of the spectral components yields the total power (for a physical process) or variance (in a statistical process), identical to what would be obtained by integrating $x^2(t)$ over the time domain, as dictated by Parseval's theorem.[1]

The spectrum of a physical process $x(t)$ often contains essential information about the nature of $x$. For instance, the pitch and timbre of a musical instrument are immediately determined from a spectral analysis. The color of a light source is determined by the spectrum of the electromagnetic wave's electric field as it fluctuates at an extremely high frequency. Obtaining a spectrum from time series such as these involves the Fourier transform, and generalizations based on Fourier analysis. In many cases the time domain is not specifically employed in practice, such as when a dispersive prism is used to obtain a spectrum of light in a spectrograph, or when a sound is perceived through its effect on the auditory receptors of the inner ear, each of which is sensitive to a particular frequency.

However, this article concentrates on situations in which the time series is known (at least in a statistical sense) or directly measured (such as by a microphone sampled by a computer). The power spectrum is important in statistical signal processing and in the statistical study of stochastic processes, as well as in many other branches of physics and engineering. Typically the process is a function of time, but one can similarly discuss data in the spatial domain being decomposed in terms of spatial frequency.[1]

Explanation

Any signal that can be represented as a variable that varies in time has a corresponding frequency spectrum. This includes familiar entities such as visible light (perceived as color), musical notes (perceived as pitch), radio/TV (specified by their frequency, or sometimes wavelength) and even the regular rotation of the earth. When these signals are viewed in the form of a frequency spectrum, certain aspects of the received signals or the underlying processes producing them are revealed. In some cases the frequency spectrum may include a distinct peak corresponding to a sine wave component. Additionally, there may be peaks corresponding to harmonics of a fundamental peak, indicating a periodic signal which is not simply sinusoidal. Or a continuous spectrum may show narrow frequency intervals which are strongly enhanced, corresponding to resonances, or frequency intervals containing almost zero power, as would be produced by a notch filter.

In physics, the signal might be a wave, such as an electromagnetic wave, an acoustic wave, or the vibration of a mechanism. The power spectral density (PSD) of the signal describes the power present in the signal as a function of frequency, per unit frequency. Power spectral density is commonly expressed in watts per hertz (W/Hz).[2]

When a signal is defined in terms only of a voltage, for instance, there is no unique power associated with the stated amplitude. In this case "power" is simply reckoned in terms of the square of the signal, as this would always be proportional to the actual power delivered by that signal into a given impedance. So one might use units of V² Hz⁻¹ for the PSD. Energy spectral density (ESD) would then have units of V² s Hz⁻¹, since energy has units of power multiplied by time (e.g., watt-hour).[3]

In the general case, the units of PSD will be the ratio of units of variance per unit of frequency; so, for example, a series of displacement values (in meters) over time (in seconds) will have PSD in units of meters squared per hertz, m²/Hz. In the analysis of random vibrations, units of g² Hz⁻¹ are frequently used for the PSD of acceleration, where g denotes the g-force.[4]

Mathematically, it is not necessary to assign physical dimensions to the signal or to the independent variable. In the following discussion the meaning of $x(t)$ will remain unspecified, but the independent variable will be assumed to be that of time.

Definition

Energy spectral density

Energy spectral density describes how the energy of a signal or a time series is distributed with frequency. Here, the term energy is used in the generalized sense of signal processing;[5] that is, the energy $E$ of a signal $x(t)$ is:

$$E \triangleq \int_{-\infty}^{\infty} \left|x(t)\right|^{2}\,dt.$$

The energy spectral density is most suitable for transients (that is, pulse-like signals) having a finite total energy. Finite or not, Parseval's theorem[6] (or Plancherel's theorem) gives us an alternate expression for the energy of the signal:

$$\int_{-\infty}^{\infty} \left|x(t)\right|^{2}\,dt = \int_{-\infty}^{\infty} \left|\hat{x}(f)\right|^{2}\,df,$$

where:

$$\hat{x}(f) \triangleq \int_{-\infty}^{\infty} e^{-i2\pi f t}\,x(t)\,dt$$

is the value of the Fourier transform of $x(t)$ at frequency $f$ (in Hz). The theorem also holds true in the discrete-time cases. Since the integral on the left-hand side is the energy of the signal, the value of $\left|\hat{x}(f)\right|^{2}\,df$ can be interpreted as a density function multiplied by an infinitesimally small frequency interval, describing the energy contained in the signal at frequency $f$ in the frequency interval $f + df$.

Therefore, the energy spectral density of $x(t)$ is defined as:[6]

$$\bar{S}_{xx}(f) \triangleq \left|\hat{x}(f)\right|^{2} \tag{Eq.1}$$

The function $\bar{S}_{xx}(f)$ and the autocorrelation of $x(t)$ form a Fourier transform pair, a result known as the Wiener–Khinchin theorem (see also Periodogram).

As a physical example of how one might measure the energy spectral density of a signal, suppose $V(t)$ represents the potential (in volts) of an electrical pulse propagating along a transmission line of impedance $Z$, and suppose the line is terminated with a matched resistor (so that all of the pulse energy is delivered to the resistor and none is reflected back). By Ohm's law, the power delivered to the resistor at time $t$ is equal to $V(t)^2/Z$, so the total energy is found by integrating $V(t)^2/Z$ with respect to time over the duration of the pulse. To find the value of the energy spectral density $\bar{S}_{xx}(f)$ at frequency $f$, one could insert between the transmission line and the resistor a bandpass filter which passes only a narrow range of frequencies ($\Delta f$, say) near the frequency of interest and then measure the total energy $E(f)$ dissipated across the resistor. The value of the energy spectral density at $f$ is then estimated to be $E(f)/\Delta f$. In this example, since the power $V(t)^2/Z$ has units of V² Ω⁻¹, the energy $E(f)$ has units of V² s Ω⁻¹ = J, and hence the estimate $E(f)/\Delta f$ of the energy spectral density has units of J Hz⁻¹, as required. In many situations, it is common to forget the step of dividing by $Z$, so that the energy spectral density instead has units of V² Hz⁻¹.

This definition generalizes in a straightforward manner to a discrete signal with a countably infinite number of values $x_n$, such as a signal sampled at discrete times $t_n = t_0 + n\,\Delta t$:

$$\bar{S}_{xx}(f) = \lim_{N\to\infty} (\Delta t)^{2} \left|\sum_{n=-N}^{N} x_n\, e^{-i2\pi f n \Delta t}\right|^{2} = (\Delta t)^{2}\left|\hat{x}_d(f)\right|^{2},$$

where $\hat{x}_d(f)$ is the discrete-time Fourier transform of $x_n$. The sampling interval $\Delta t$ is needed to keep the correct physical units and to ensure that we recover the continuous case in the limit $\Delta t \to 0$. But in the mathematical sciences the interval is often set to 1, which simplifies the results at the expense of generality. (See also normalized frequency.)
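The discrete form can be checked numerically. The following is a minimal sketch, assuming NumPy and a made-up Gaussian test pulse; the names `dt`, `esd`, `energy_time` and `energy_freq` are illustrative and not part of the article. It evaluates $(\Delta t)^2|\hat{x}_d(f)|^2$ on the FFT frequency grid and compares the energy computed in the time domain with the energy obtained by summing the spectral density over frequency.

```python
import numpy as np

# Hypothetical test signal: a Gaussian pulse sampled every dt seconds.
dt = 1e-3                                   # sampling interval in seconds (assumed)
t = np.arange(-1.0, 1.0, dt)                # time axis that covers the whole pulse
x = np.exp(-t**2 / (2 * 0.05**2))           # transient with finite total energy

# Energy spectral density estimate: (dt)^2 * |sum_n x_n exp(-i 2 pi f n dt)|^2,
# evaluated at the FFT frequencies.
X = np.fft.fft(x)
esd = (dt ** 2) * np.abs(X) ** 2
df = 1.0 / (len(x) * dt)                    # spacing of the FFT frequency grid in Hz

energy_time = np.sum(np.abs(x) ** 2) * dt   # Riemann sum for the time-domain energy
energy_freq = np.sum(esd) * df              # Riemann sum for the integral of the ESD

print(energy_time, energy_freq)
```

The two sums agree to rounding error because the discrete Fourier transform satisfies its own form of Parseval's theorem; without the factor $(\Delta t)^2$ the frequency-domain sum would not carry the same units as the time-domain energy.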

Power spectral density

The above definition of energy spectral density is suitable for transients (pulse-like signals) whose energy is concentrated around one time window; then the Fourier transforms of the signals generally exist. For continuous signals over all time, one must rather define the power spectral density (PSD), which exists for stationary processes; this describes how the power of a signal or time series is distributed over frequency, as in the simple example given previously. Here, power can be the actual physical power, or more often, for convenience with abstract signals, is simply identified with the squared value of the signal. For example, statisticians study the variance of a function over time $x(t)$ (or over some other independent variable), and using an analogy with electrical signals (among other physical processes), it is customary to refer to it as the power spectrum even when there is no physical power involved. If one were to create a physical voltage source which followed $x(t)$ and applied it to the terminals of a one ohm resistor, then indeed the instantaneous power dissipated in that resistor would be given by $x^2(t)$ watts.

The average power $P$ of a signal $x(t)$ over all time is therefore given by the following time average, where the period $T$ is centered about some arbitrary time $t = t_0$:

$$P = \lim_{T\to\infty} \frac{1}{T} \int_{t_0 - T/2}^{t_0 + T/2} \left|x(t)\right|^{2}\,dt.$$

However, for the sake of dealing with the math that follows, it is more convenient to deal with time limits in the signal itself rather than time limits in the bounds of the integral. As such, we have an alternative representation of the average power, where $x_T(t) = x(t)\,w_T(t)$ and $w_T(t)$ is unity within the arbitrary period and zero elsewhere:

$$P = \lim_{T\to\infty} \frac{1}{T} \int_{-\infty}^{\infty} \left|x_T(t)\right|^{2}\,dt.$$

Clearly, in cases where the above expression for $P$ is non-zero (even as $T$ grows without bound), the integral itself must also grow without bound. That is the reason that we cannot use the energy spectral density itself, which is that diverging integral, in such cases.

In analyzing the frequency content of the signal $x(t)$, one might like to compute the ordinary Fourier transform $\hat{x}(f)$; however, for many signals of interest the Fourier transform does not formally exist.[N 1] Regardless, Parseval's theorem tells us that we can re-write the average power as follows:

$$P = \lim_{T\to\infty} \frac{1}{T} \int_{-\infty}^{\infty} \left|\hat{x}_T(f)\right|^{2}\,df.$$

Then the power spectral density is simply defined as the integrand above.[8][9]

$$S_{xx}(f) = \lim_{T\to\infty} \frac{1}{T} \left|\hat{x}_T(f)\right|^{2} \tag{Eq.2}$$

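From here, we can also view $\left|\hat{x}_T(f)\right|^{2}$ as the Fourier transform of the time convolution of $x_T^{*}(-t)$ and $x_T(t)$:

$$\left|\hat{x}_T(f)\right|^{2} = \mathcal{F}\left\{x_T^{*}(-t) \mathbin{*} x_T(t)\right\} = \int_{-\infty}^{\infty}\left[\int_{-\infty}^{\infty} x_T^{*}(t-\tau)\,x_T(t)\,dt\right] e^{-i2\pi f\tau}\,d\tau.$$

Now, if we divide the time convolution above by the period $T$ and take the limit as $T \to \infty$, it becomes the autocorrelation function of the non-windowed signal $x(t)$, which is denoted as $R_{xx}(\tau)$, provided that $x(t)$ is ergodic, which is true in most, but not all, practical cases.[10]

$$\lim_{T\to\infty} \frac{1}{T}\left|\hat{x}_T(f)\right|^{2} = \int_{-\infty}^{\infty}\left[\lim_{T\to\infty} \frac{1}{T}\int_{-\infty}^{\infty} x_T^{*}(t-\tau)\,x_T(t)\,dt\right] e^{-i2\pi f\tau}\,d\tau = \int_{-\infty}^{\infty} R_{xx}(\tau)\,e^{-i2\pi f\tau}\,d\tau$$

From here we see, again assuming the ergodicity of $x(t)$, that the power spectral density can be found as the Fourier transform of the autocorrelation function (Wiener–Khinchin theorem).

$$S_{xx}(f) = \int_{-\infty}^{\infty} R_{xx}(\tau)\,e^{-i2\pi f\tau}\,d\tau = \hat{R}_{xx}(f) \tag{Eq.3}$$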

Many authors use this equality to actually define the power spectral density.[11]
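A finite, discrete analogue of the Wiener–Khinchin relation can be verified directly. The sketch below assumes NumPy and a hypothetical random record; it uses the circular (periodic) autocorrelation of a single finite record rather than the limiting autocorrelation of an ergodic process, so it illustrates the algebraic identity only, not the limit $T \to \infty$.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 256
x = rng.standard_normal(N)                 # one hypothetical real-valued record

# Squared magnitude of the DFT, divided by the record length.
X = np.fft.fft(x)
periodogram = np.abs(X) ** 2 / N

# Circular autocorrelation r[m] = (1/N) * sum_n x[n] * x[(n + m) mod N].
r = np.array([np.dot(x, np.roll(x, -m)) for m in range(N)]) / N

# The DFT of the circular autocorrelation reproduces the periodogram.
psd_from_acf = np.fft.fft(r).real

print(np.max(np.abs(periodogram - psd_from_acf)))
```

The printed difference is at the level of floating-point rounding. For a real measurement the circular wrap-around is an artifact of the finite record, which is one reason practical estimators window or zero-pad the data.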

The power of the signal in a given frequency band $[f_1, f_2]$, where $0 < f_1 < f_2$, can be calculated by integrating over frequency. Since $S_{xx}(-f) = S_{xx}(f)$, an equal amount of power can be attributed to positive and negative frequency bands, which accounts for the factor of 2 in the following form (such trivial factors depend on the conventions used):

$$P_{\text{bandlimited}} = 2\int_{f_1}^{f_2} S_{xx}(f)\,df.$$
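As an illustration of the factor of 2, the following sketch (assuming NumPy; the sampling rate, amplitude and band edges are made-up values) estimates a two-sided PSD for a sine wave from a finite record and integrates it over a positive-frequency band around the tone. The result is compared with the mean square of the signal.

```python
import numpy as np

fs = 1000.0                          # sampling rate in Hz (assumed)
N = 1000                             # number of samples; the record holds whole cycles
dt = 1.0 / fs
t = np.arange(N) * dt
A, f0 = 2.0, 50.0                    # hypothetical amplitude and tone frequency
x = A * np.sin(2 * np.pi * f0 * t)

# Two-sided PSD estimate from a finite record: (dt^2 / T) * |DFT|^2.
T = N * dt
X = np.fft.fft(x)
freqs = np.fft.fftfreq(N, d=dt)
S = (dt ** 2) * np.abs(X) ** 2 / T
df = 1.0 / T

# Integrate over the positive band [f1, f2] and double to include the mirror band.
f1, f2 = 40.0, 60.0
band = (freqs >= f1) & (freqs <= f2)
band_power = 2.0 * np.sum(S[band]) * df

mean_square = np.mean(x ** 2)        # average power of the sine, A^2 / 2
print(band_power, mean_square)
```

Both numbers equal $A^2/2$ here because the record contains an integer number of cycles, so all of the power falls in a single frequency bin inside the band.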

More generally, similar techniques may be used to estimate a time-varying spectral density. In this case the time interval $T$ is finite rather than approaching infinity. This results in decreased spectral coverage and resolution, since frequencies of less than $1/T$ are not sampled, and results at frequencies which are not an integer multiple of $1/T$ are not independent. Using just a single such time series, the estimated power spectrum will be very "noisy"; however, this can be alleviated if it is possible to evaluate the expected value of the estimate using a large (or infinite) number of short-term spectra corresponding to statistical ensembles of realizations of $x(t)$ evaluated over the specified time window.

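Just as with the energy spectral density, the definition of the power spectral density can be generalized to discrete time variables $x_n$. As before, we can consider a window of $-N \le n \le N$ with the signal sampled at discrete times $t_n = t_0 + n\,\Delta t$ for a total measurement period $T = (2N+1)\,\Delta t$:

$$S_{xx}(f) = \lim_{N\to\infty} \frac{(\Delta t)^{2}}{T} \left|\sum_{n=-N}^{N} x_n\, e^{-i2\pi f n \Delta t}\right|^{2}.$$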

Note that a single estimate of the PSD can be obtained through a finite number of samplings. As before, the actual PSD is achieved when $N$ (and thus $T$) approaches infinity and the expected value is formally applied. In a real-world application, one would typically average a finite-measurement PSD over many trials to obtain a more accurate estimate of the theoretical PSD of the physical process underlying the individual measurements. This computed PSD is sometimes called a periodogram. This periodogram converges to the true PSD as the number of estimates as well as the averaging time interval $T$ approach infinity (Brown & Hwang).[12]
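The effect of averaging over trials can be sketched as follows, assuming NumPy and synthetic unit-variance white noise as a stand-in for repeated measurements. The helper `periodogram` is a hypothetical name for the finite-record estimate $(\Delta t)^2|\hat{x}_d(f)|^2/T$; with $\Delta t = 1$ the true two-sided PSD of this noise is 1 at every frequency.

```python
import numpy as np

def periodogram(x, dt):
    """Finite-record two-sided PSD estimate: (dt^2 / T) * |DFT|^2 with T = N * dt."""
    N = len(x)
    return (dt ** 2) * np.abs(np.fft.fft(x)) ** 2 / (N * dt)

rng = np.random.default_rng(1)
dt = 1.0                                    # sampling interval (assumed)
n_trials, N = 200, 256                      # hypothetical number of trials and samples
records = rng.standard_normal((n_trials, N))

single = periodogram(records[0], dt)        # estimate from one trial
averaged = np.mean([periodogram(r, dt) for r in records], axis=0)

# Scatter of each estimate across frequency bins around the flat true PSD.
print(single.std(), averaged.std())
```

The single-trial estimate scatters by roughly as much as its own mean, however long the record is, while the scatter of the average shrinks roughly as the inverse square root of the number of trials.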

If two signals both possess power spectral densities, then the cross-spectral density can similarly be calculated; as the PSD is related to the autocorrelation, so is the cross-spectral density related to the cross-correlation.

Properties of the power spectral density

Some properties of the PSD include:[13]

Cross power spectral density

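Given two signals $x(t)$ and $y(t)$, each of which possess power spectral densities $S_{xx}(f)$ and $S_{yy}(f)$, it is possible to define a cross power spectral density (CPSD) or cross spectral density (CSD). To begin, let us consider the average power of such a combined signal.

$$\begin{aligned}P &= \lim_{T\to\infty} \frac{1}{T}\int_{-\infty}^{\infty} \left[x_T(t) + y_T(t)\right]^{*}\left[x_T(t) + y_T(t)\right]dt \\ &= \lim_{T\to\infty} \frac{1}{T}\int_{-\infty}^{\infty} |x_T(t)|^{2} + x_T^{*}(t)\,y_T(t) + y_T^{*}(t)\,x_T(t) + |y_T(t)|^{2}\,dt\end{aligned}$$

Using the same notation and methods as used for the power spectral density derivation, we exploit Parseval's theorem and obtain

$$\begin{aligned}S_{xy}(f) &= \lim_{T\to\infty} \frac{1}{T}\left[\hat{x}_T^{*}(f)\,\hat{y}_T(f)\right] & S_{yx}(f) &= \lim_{T\to\infty} \frac{1}{T}\left[\hat{y}_T^{*}(f)\,\hat{x}_T(f)\right]\end{aligned}$$

where, again, the contributions of $S_{xx}(f)$ and $S_{yy}(f)$ are already understood. Note that $S_{xy}^{*}(f) = S_{yx}(f)$, so the full contribution to the cross power is, generally, from twice the real part of either individual CPSD. Just as before, from here we recast these products as the Fourier transform of a time convolution, which when divided by the period and taken to the limit $T \to \infty$ becomes the Fourier transform of a cross-correlation function.[15]

$$\begin{aligned}S_{xy}(f) &= \int_{-\infty}^{\infty}\left[\lim_{T\to\infty} \frac{1}{T}\int_{-\infty}^{\infty} x_T^{*}(t-\tau)\,y_T(t)\,dt\right] e^{-i2\pi f\tau}\,d\tau = \int_{-\infty}^{\infty} R_{xy}(\tau)\,e^{-i2\pi f\tau}\,d\tau \\ S_{yx}(f) &= \int_{-\infty}^{\infty}\left[\lim_{T\to\infty} \frac{1}{T}\int_{-\infty}^{\infty} y_T^{*}(t-\tau)\,x_T(t)\,dt\right] e^{-i2\pi f\tau}\,d\tau = \int_{-\infty}^{\infty} R_{yx}(\tau)\,e^{-i2\pi f\tau}\,d\tau\end{aligned}$$

where $R_{xy}(\tau)$ is the cross-correlation of $x(t)$ with $y(t)$ and $R_{yx}(\tau)$ is the cross-correlation of $y(t)$ with $x(t)$. In light of this, the PSD is seen to be a special case of the CSD for $x(t) = y(t)$. For the case that $x(t)$ and $y(t)$ are voltage or current signals, their Fourier transforms $\hat{x}(f)$ and $\hat{y}(f)$ are strictly positive by convention. Therefore, in typical signal processing, the full CPSD is just one of the CPSDs scaled by a factor of two.

For discrete signals $x_n$ and $y_n$, the relationship between the cross-spectral density and the cross-covariance is

$$S_{xy}(f) = \sum_{n=-\infty}^{\infty} R_{xy}(\tau_n)\,e^{-i2\pi f\tau_n}\,\Delta\tau.$$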
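A small numerical sketch of the cross-spectral density, assuming NumPy and a synthetic pair of signals in which `y` is a circularly delayed copy of `x` (the delay and record length are made-up values). It forms finite-record versions of $S_{xy}$ and $S_{yx}$, checks the conjugate symmetry noted above, and reads the delay back from the phase of $S_{xy}$.

```python
import numpy as np

rng = np.random.default_rng(2)
N, dt, delay = 512, 1.0, 5            # record length, sampling interval, delay in samples (assumed)
x = rng.standard_normal(N)
y = np.roll(x, delay)                 # y[n] = x[n - delay], with circular wrap-around

X, Y = np.fft.fft(x), np.fft.fft(y)
T = N * dt

# Finite-record cross-spectral density estimates (the limit T -> infinity is not taken).
S_xy = (dt ** 2) * np.conj(X) * Y / T
S_yx = (dt ** 2) * np.conj(Y) * X / T

# A pure delay appears as a linear phase: arg S_xy at bin k equals -2*pi*k*delay/N.
k = 1
estimated_delay = -np.angle(S_xy[k]) * N / (2 * np.pi * k)
print(estimated_delay)
```

Because `S_yx` is the complex conjugate of `S_xy`, their sum is twice the real part of either one, which is the cross-power contribution described in the text.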

Estimation

The goal of spectral density estimation is to estimate the spectral density of a random signal from a sequence of time samples. Depending on what is known about the signal, estimation techniques can involve parametric or non-parametric approaches, and may be based on time-domain or frequency-domain analysis. For example, a common parametric technique involves fitting the observations to an autoregressive model. A common non-parametric technique is the periodogram.

The spectral density is usually estimated using Fourier transform methods (such as the Welch method), but other techniques such as the maximum entropy method can also be used.

Related concepts

- The spectral centroid of a signal is the midpoint of its spectral density function, i.e. the frequency that divides the distribution into two equal parts.

- The spectral edge frequency (SEF), usually expressed as "SEF x", represents the frequency below which x percent of the total power of a given signal is located; typically, x is in the range 75 to 95. It is a particularly popular measure in EEG monitoring, in which case SEF has variously been used to estimate the depth of anesthesia and stages of sleep.[16][17][18]

- A spectral envelope is the envelope curve of the spectrum density. It describes one point in time (one window, to be precise). For example, in remote sensing using a spectrometer, the spectral envelope of a feature is the boundary of its spectral properties, as defined by the range of brightness levels in each of the spectral bands of interest.[19]

- The spectral density is a function of frequency, not a function of time. However, the spectral density of a small window of a longer signal may be calculated, and plotted versus time associated with the window. Such a graph is called a spectrogram. This is the basis of a number of spectral analysis techniques such as the short-time Fourier transform and wavelets.

- A "spectrum" generally means the power spectral density, as discussed above, which depicts the distribution of signal content over frequency. For transfer functions (e.g., Bode plot, chirp) the complete frequency response may be graphed in two parts: power versus frequency and phase versus frequency (the phase spectral density, phase spectrum, or spectral phase). Less commonly, the two parts may be the real and imaginary parts of the transfer function. This is not to be confused with the frequency response of a transfer function, which also includes a phase (or equivalently, a real and imaginary part) as a function of frequency. The time-domain impulse response cannot generally be uniquely recovered from the power spectral density alone without the phase part. Although these are also Fourier transform pairs, there is no symmetry (as there is for the autocorrelation) forcing the Fourier transform to be real-valued. See Ultrashort pulse#Spectral phase, phase noise, group delay.

- Sometimes one encounters an amplitude spectral density (ASD), which is the square root of the PSD; the ASD of a voltage signal has units of V Hz⁻¹ᐟ².[20] This is useful when the shape of the spectrum is rather constant, since variations in the ASD will then be proportional to variations in the signal's voltage level itself. But it is mathematically preferred to use the PSD, since only in that case is the area under the curve meaningful in terms of actual power over all frequency or over a specified bandwidth.
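Several of the quantities in this list reduce to a few lines of array arithmetic. The sketch below assumes NumPy and a hypothetical one-sided PSD sampled on a uniform frequency grid; `spectral_edge_frequency` is an illustrative helper name. The "midpoint" described in the first bullet is obtained here as the 50 percent edge, and the amplitude spectral density as the square root of the PSD.

```python
import numpy as np

def spectral_edge_frequency(freqs, psd, percent):
    """Lowest grid frequency at which the cumulative power reaches `percent` of the total."""
    cumulative = np.cumsum(psd)                    # running total power (uniform grid assumed)
    threshold = (percent / 100.0) * cumulative[-1]
    idx = np.searchsorted(cumulative, threshold)   # first index with cumulative >= threshold
    return freqs[idx]

# Hypothetical one-sided PSD: flat from 0 to 99 Hz on a 1 Hz grid.
freqs = np.arange(100.0)
psd = np.ones_like(freqs)

sef95 = spectral_edge_frequency(freqs, psd, 95)    # "SEF 95"
midpoint = spectral_edge_frequency(freqs, psd, 50) # frequency splitting the power in half
asd = np.sqrt(psd)                                 # amplitude spectral density

print(sef95, midpoint)
```

On a coarse frequency grid the returned edge is only accurate to one bin; interpolating the cumulative power between bins would be a natural refinement.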

Applications

Electrical engineering

Spectrogram of an FM radio signal with frequency on the horizontal axis and time increasing upwards on the vertical axis.

The concept and use of the power spectrum of a signal is fundamental in electrical engineering, especially in electronic communication systems, including radio communications, radars, and related systems, plus passive remote sensing technology. Electronic instruments called spectrum analyzers are used to observe and measure the power spectra of signals.

The spectrum analyzer measures the magnitude of the short-time Fourier transform (STFT) of an input signal. If the signal being analyzed can be considered a stationary process, the STFT is a good smoothed estimate of its power spectral density.
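The STFT magnitude can be sketched in a few lines. This is an illustrative implementation, assuming NumPy, a Hann window and a made-up two-tone test signal; the function name `spectrogram` and its parameters `nperseg` and `hop` are assumptions for this example and do not describe how any particular spectrum analyzer works internally.

```python
import numpy as np

def spectrogram(x, fs, nperseg, hop):
    """Squared STFT magnitude of x using a Hann window (unnormalised)."""
    window = np.hanning(nperseg)
    starts = np.arange(0, len(x) - nperseg + 1, hop)       # first sample of each frame
    frames = np.array([x[s:s + nperseg] * window for s in starts])
    spec = np.abs(np.fft.rfft(frames, axis=1)) ** 2        # one spectrum per frame
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    times = (starts + nperseg / 2) / fs                    # centre time of each frame
    return freqs, times, spec

# Hypothetical test signal: 100 Hz for one second, then 200 Hz for one second.
fs = 1000.0
t = np.arange(int(fs)) / fs
x = np.concatenate([np.sin(2 * np.pi * 100 * t), np.sin(2 * np.pi * 200 * t)])

freqs, times, spec = spectrogram(x, fs, nperseg=200, hop=100)
peak_first = freqs[spec[0].argmax()]     # dominant frequency in the first frame
peak_last = freqs[spec[-1].argmax()]     # dominant frequency in the last frame
print(peak_first, peak_last)
```

For a stationary signal, averaging the rows of `spec` over time and applying the PSD scaling used earlier would give a smoothed spectral estimate; the window length trades frequency resolution against time resolution.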

Cosmology

Primordial fluctuations, density variations in the early universe, are quantified by a power spectrum which gives the power of the variations as a function of spatial scale.

Climate Science

Power spectral analysis has been used to examine the spatial structures for climate research.[21] These results suggest that atmospheric turbulence links climate change to more local regional volatility in weather conditions.[22]

See also

- Bispectrum

- Brightness temperature

- Colors of noise

- Least-squares spectral analysis

- Noise spectral density

- Spectral density estimation

- Spectral efficiency

- Spectral leakage

- Spectral power distribution

- Whittle likelihood

- Window function

Notes

References

- ^ a b c P Stoica & R Moses (2005). "Spectral Analysis of Signals" (PDF).

- ^ Gérard Maral (2003). VSAT Networks. John Wiley and Sons. ISBN 978-0-470-86684-9.

- ^ Michael Peter Norton & Denis G. Karczub (2003). Fundamentals of Noise and Vibration Analysis for Engineers. Cambridge University Press. ISBN 978-0-521-49913-2.

- ^ Alessandro Birolini (2007). Reliability Engineering. Springer. p. 83. ISBN 978-3-540-49388-4.

- ^ Oppenheim; Verghese. Signals, Systems, and Inference. pp. 32–4.

- ^ a b Stein, Jonathan Y. (2000). Digital Signal Processing: A Computer Science Perspective. Wiley. p. 115.

- ^ Hannes Risken (1996). The Fokker–Planck Equation: Methods of Solution and Applications (2nd ed.). Springer. p. 30. ISBN 9783540615309.

- ^ Fred Rieke; William Bialek & David Warland (1999). Spikes: Exploring the Neural Code (Computational Neuroscience). MIT Press. ISBN 978-0262681087.

- ^ Scott Miller & Donald Childers (2012). Probability and random processes. Academic Press. pp. 370–5.

- ^ The Wiener–Khinchin theorem makes sense of this formula for any wide-sense stationary process under weaker hypotheses: $R_{xx}$ does not need to be absolutely integrable, it only needs to exist. But the integral can no longer be interpreted as usual. The formula also makes sense if interpreted as involving distributions (in the sense of Laurent Schwartz, not in the sense of a statistical cumulative distribution function) instead of functions. If $R_{xx}$ is continuous, Bochner's theorem can be used to prove that its Fourier transform exists as a positive measure, whose distribution function is F (but not necessarily as a function and not necessarily possessing a probability density).

- ^ Dennis Ward Ricker (2003). Echo Signal Processing. Springer. ISBN 978-1-4020-7395-3.

- ^ Robert Grover Brown & Patrick Y.C. Hwang (1997). Introduction to Random Signals and Applied Kalman Filtering. John Wiley & Sons. ISBN 978-0-471-12839-7.

- ^ Von Storch, H.; Zwiers, F. W. (2001). Statistical analysis in climate research. Cambridge University Press. ISBN 978-0-521-01230-0.

- ^ Wilbur B. Davenport & William L. Root (1987). An Introduction to the Theory of Random Signals and Noise. IEEE Press. ISBN 0-87942-235-1.

- ^ William D Penny (2009). "Signal Processing Course, chapter 7".

- ^ Iranmanesh, Saam; Rodriguez-Villegas, Esther (2017). "An Ultralow-Power Sleep Spindle Detection System on Chip". IEEE Transactions on Biomedical Circuits and Systems. 11 (4): 858–866. doi:10.1109/TBCAS.2017.2690908. hdl:10044/1/46059. PMID 28541914.

- ^ Imtiaz, Syed Anas; Rodriguez-Villegas, Esther (2014). "A Low Computational Cost Algorithm for REM Sleep Detection Using Single Channel EEG". Annals of Biomedical Engineering. 42 (11): 2344–59. doi:10.1007/s10439-014-1085-6. PMC 4204008. PMID 25113231.

- ^ Drummond JC, Brann CA, Perkins DE, Wolfe DE (1991). "A comparison of median frequency, spectral edge frequency, a frequency band power ratio, total power, and dominance shift in the determination of depth of anesthesia". Acta Anaesthesiol. Scand. 35 (8): 693–9.

- ^ Swartz, Diemo (1998). "Spectral Envelopes". [1].

- ^ Michael Cerna & Audrey F. Harvey (2000). "The Fundamentals of FFT-Based Signal Analysis and Measurement" (PDF).

- ^ Communication, N. B. I. (2022-05-23). "Danish astrophysics student discovers link between global warming and locally unstable weather". nbi.ku.dk. Retrieved 2022-07-23.

- ^ Sneppen, Albert (2022-05-05). "The power spectrum of climate change". The European Physical Journal Plus. 137 (5): 555. arXiv:2205.07908. Bibcode:2022EPJP..137..555S. doi:10.1140/epjp/s13360-022-02773-w. ISSN 2190-5444. S2CID 248652864.

External links

- Power Spectral Density Matlab scripts

Source: https://en.wikipedia.org/wiki/Spectral_density

Posted by: smithcoctur.blogspot.com

